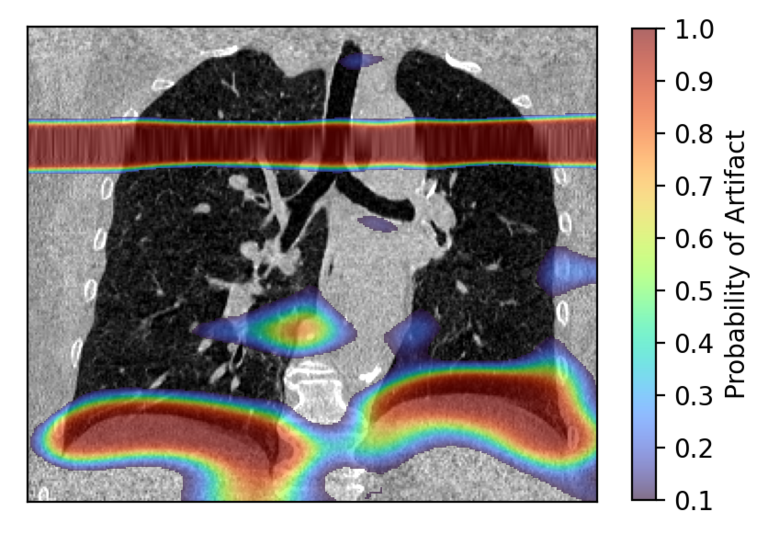

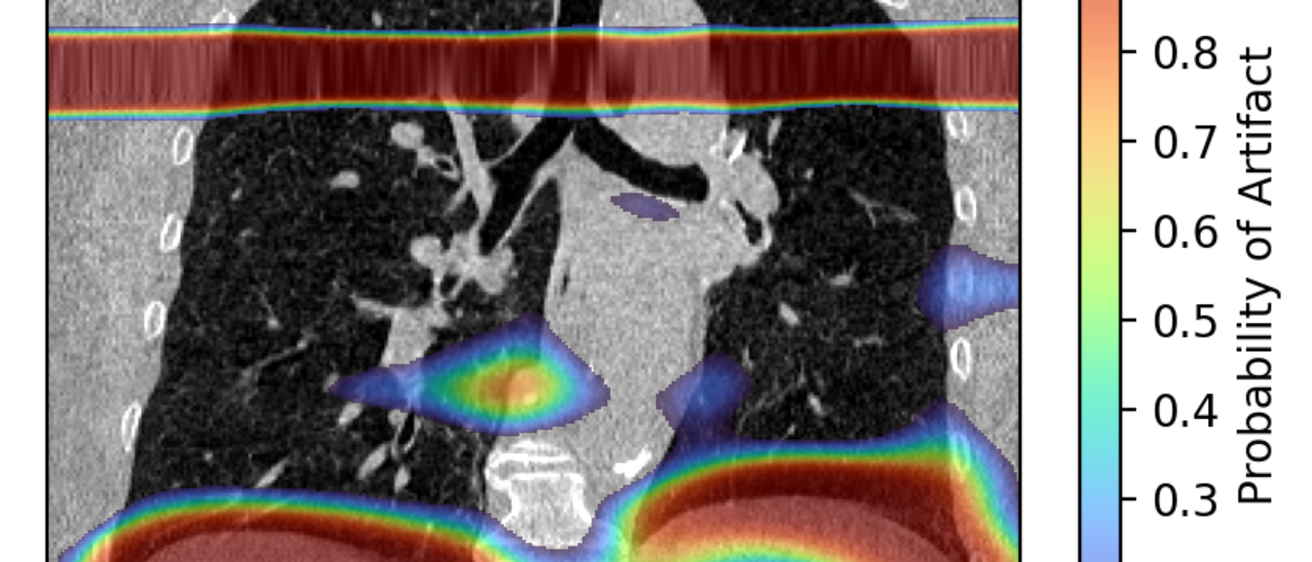

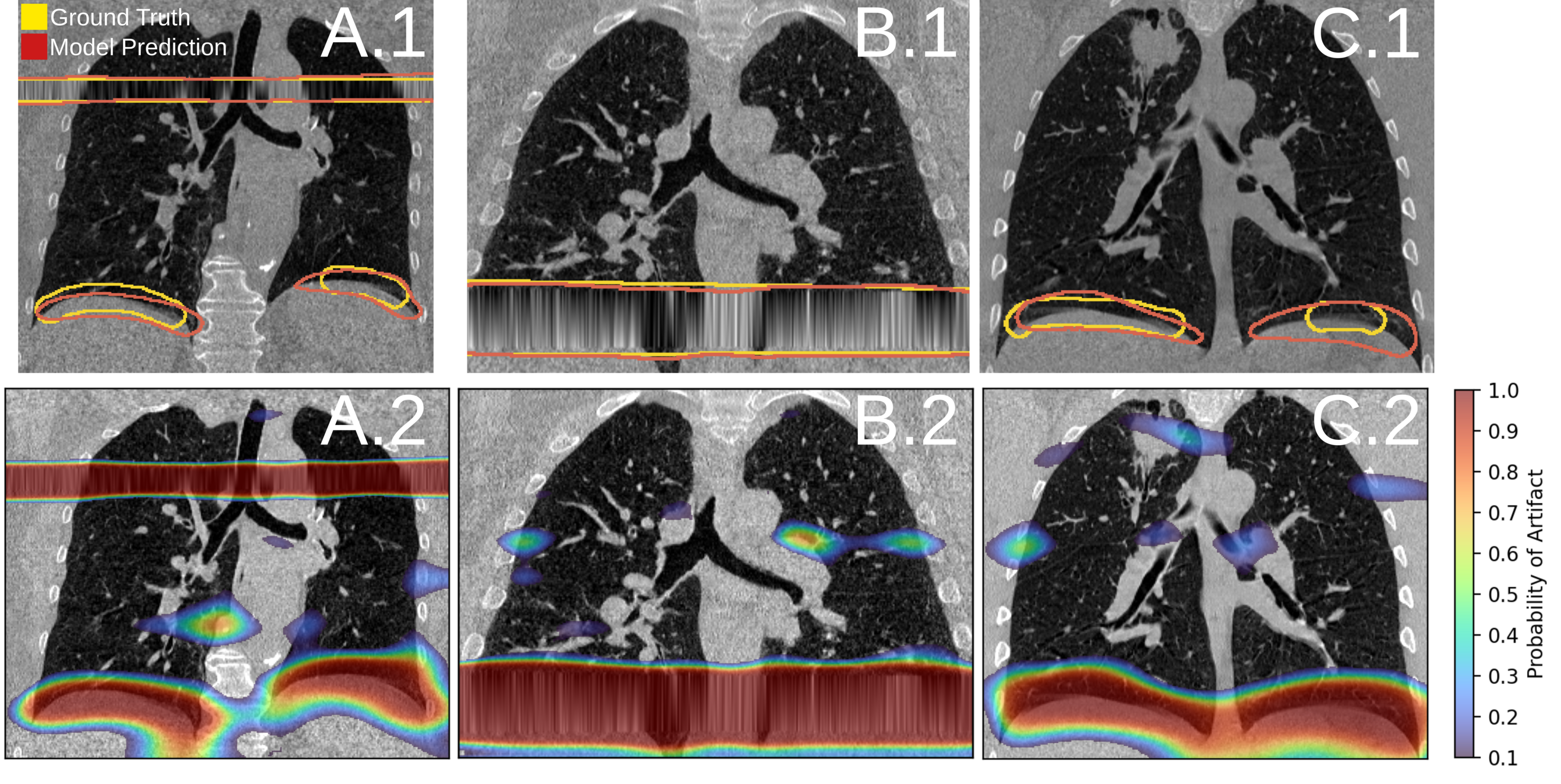

The raw output of the artifact detection network is a probability map of the locations where an artifact is most likely present.

Detecting and Correcting Motion Artifacts in 4DCT Imaging to Aid Radiation Treatment Planning

Publications:

Background:

Lung cancer is one of the most common types of cancer. The National Cancer Institute estimates that about 1 in 17 people will be diagnosed with lung cancer in their lifetime [1]. Radiation therapy is often used and has proven to be effective, but it carries risks: between 13% and 37% of patients who receive radiation therapy develop radiation toxicity [3]. This contributes to a 5-year survival rate of only 23% [2]. But how does radiation therapy actually work?

High-energy radiation is delivered to the patient's body to target the tumor. The beam rotates around the patient and hits the tumor from many different angles. But no matter what we do, the tumor is not the only thing irradiated; there is a lot of collateral damage to healthy tissue. To maximize the radiation delivered to the tumor while sparing healthy tissue, a four-dimensional computed tomography (4DCT) scan is used to guide the radiation beam.

4DCT is similar to a regular CT scan, but it images the patient over time. A single 4DCT scan is a sequence of ten 3D scans taken over the patient's breathing cycle. This allows us to image the lungs as they breathe and gives a lot of spatial information about the tumor's location and shape inside the lungs. A tumor is not a static object in the lungs; it changes shape and position as the lungs expand and contract. With 4DCT, we can precisely track the tumor and reduce the amount of radiation delivered to healthy tissue.
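A 4DCT scan can be pictured as a four-dimensional array: one index for the breathing phase (ten phases here) and three for the spatial axes. The toy sketch below is only an illustration, not this project's code. It assumes a binary tumor mask is already available for each phase, and the helper names (`centroid`, `tumor_trajectory`, `motion_range`, `make_phase`) are hypothetical. It tracks the tumor's centroid across the breathing cycle to show how much it moves.

```python
from typing import List, Tuple

# A 3D binary mask indexed as mask[z][y][x]; 1 marks a tumor voxel.
Mask3D = List[List[List[int]]]
Point = Tuple[float, float, float]


def centroid(mask: Mask3D) -> Point:
    """Return the (z, y, x) centroid of the nonzero voxels in a 3D mask."""
    total = 0
    sz = sy = sx = 0.0
    for z, plane in enumerate(mask):
        for y, row in enumerate(plane):
            for x, v in enumerate(row):
                if v:
                    total += 1
                    sz += z
                    sy += y
                    sx += x
    if total == 0:
        raise ValueError("mask contains no tumor voxels")
    return (sz / total, sy / total, sx / total)


def tumor_trajectory(phases: List[Mask3D]) -> List[Point]:
    """Centroid of the tumor in each respiratory phase of a 4DCT scan."""
    return [centroid(m) for m in phases]


def motion_range(traj: List[Point]) -> Point:
    """Peak-to-peak centroid displacement along each axis, in voxels."""
    return tuple(max(p[i] for p in traj) - min(p[i] for p in traj) for i in range(3))


def make_phase(z_pos: int, size: int = 6) -> Mask3D:
    """Toy phase: a single-voxel 'tumor' at (z_pos, 2, 2) in a size^3 volume."""
    vol = [[[0] * size for _ in range(size)] for _ in range(size)]
    vol[z_pos][2][2] = 1
    return vol


# Ten phases: the toy tumor moves 0 -> 4 -> 0 voxels along the z (slice) axis.
z_positions = [0, 1, 2, 3, 4, 4, 3, 2, 1, 0]
scan_4d = [make_phase(z) for z in z_positions]
traj = tumor_trajectory(scan_4d)
print(len(scan_4d), motion_range(traj))
```

A real pipeline would work on full-resolution volumes with an array library and convert voxel indices to millimeters using the scan's voxel spacing; plain lists keep the idea visible.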

Figure 1. GIF of 4DCT with a tumor in the right lung.

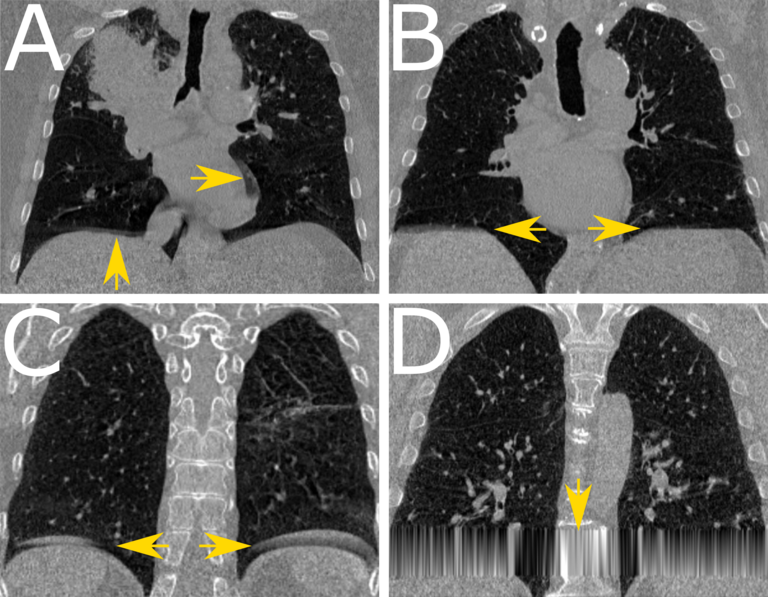

While 4DCT is much better than a static CT scan, it has limitations. Irregular breathing during scanning creates glitches in the acquired image. We call these respiratory motion artifacts, or artifacts for short. Lung cancer patients are often very ill and have difficulty controlling their breathing, so getting them to breathe at consistent volumes and frequencies is hard. As a result, respiratory motion artifacts are very common and reduce the anatomical accuracy of the image. To improve image quality, we propose deep learning networks that can detect and correct motion artifacts in 4DCT images.

Methods:

We applied deep learning in two main ways. The first is an artifact detection network, which must accurately identify all types of artifacts in an image and predict where they are located. The second is an artifact correction network, which removes artifacts from the image and generates a prediction of what the underlying anatomy should look like.

To create the artifact detection model, we first built a dataset of CT data paired with artifact maps that tell the network what an artifact is and where it is located. During training, the data is passed through the network, which learns features describing what artifacts look like and where they are commonly found. Once trained, the model produces a prediction of where it thinks artifacts are in a 4DCT image.
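Detection of this kind is commonly framed as per-voxel binary classification. As a hedged sketch (the binary cross-entropy loss, the 0.5 threshold, and the Dice score are assumptions for illustration, not necessarily what this project used), each artifact map acts as a 0/1 label per voxel, the network's probability map is scored against it during training, and at inference the probability map is thresholded into a binary artifact mask. Maps are flattened to 1D lists to keep the example short.

```python
import math
from typing import List


def bce_loss(prob_map: List[float], artifact_map: List[int], eps: float = 1e-7) -> float:
    """Mean binary cross-entropy between predicted probabilities and 0/1 labels."""
    if len(prob_map) != len(artifact_map):
        raise ValueError("maps must have the same number of voxels")
    total = 0.0
    for p, y in zip(prob_map, artifact_map):
        p = min(max(p, eps), 1.0 - eps)  # clip to avoid log(0)
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(prob_map)


def threshold(prob_map: List[float], t: float = 0.5) -> List[int]:
    """Turn a probability map into a binary artifact mask."""
    return [1 if p >= t else 0 for p in prob_map]


def dice(pred: List[int], truth: List[int]) -> float:
    """Dice overlap between two binary masks (1.0 means perfect agreement)."""
    inter = sum(a & b for a, b in zip(pred, truth))
    denom = sum(pred) + sum(truth)
    return 1.0 if denom == 0 else 2.0 * inter / denom


truth = [0, 0, 1, 1, 0, 0]              # toy artifact map: voxels 2 and 3 are artifact
probs = [0.1, 0.2, 0.9, 0.6, 0.4, 0.05]  # toy network output
mask = threshold(probs)
print(mask, dice(mask, truth), bce_loss(probs, truth))
```

The loss rewards confident, correct probabilities voxel by voxel, while thresholding and an overlap score describe the final binary prediction.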

Results:

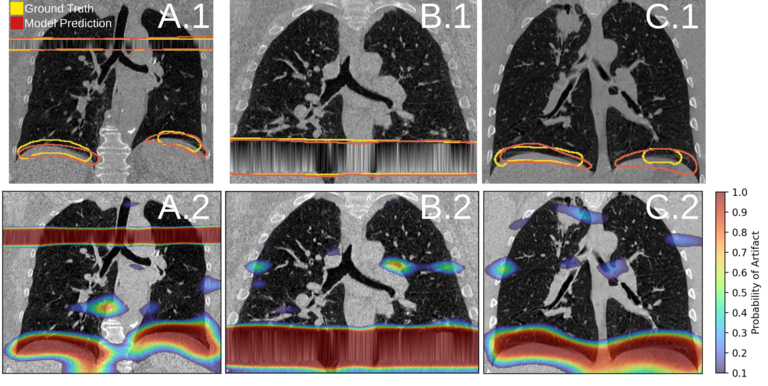

Figure 2 shows the general performance of the artifact detection model. For more information on this topic, please see the publication cited above.

Figure 2. Artifact detection network performance. [Carrizales, 2024]

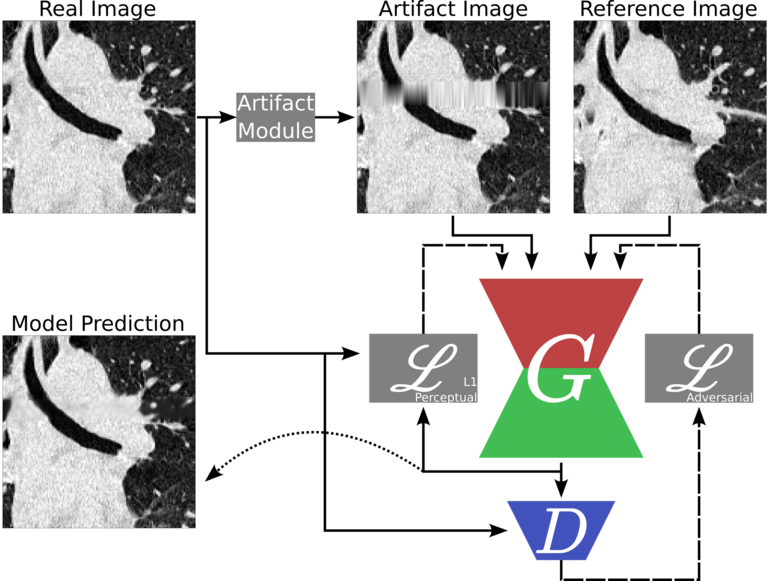

We developed a generative adversarial network (GAN) for artifact correction in 4DCT images. Figure 3 shows the general pipeline used to create this model.
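A GAN pairs a generator, which here would map an artifact image to a corrected image, with a discriminator that tries to tell real artifact-free images from corrected ones. The sketch below shows one common way to write the two training objectives, modeled on the conditional-GAN (pix2pix-style) recipe of an adversarial term plus an L1 reconstruction term. This formulation, the weight `lam`, and all function names are assumptions for illustration, not this project's confirmed design. Discriminator outputs are treated as probabilities in (0, 1) and images as flattened lists of intensities.

```python
import math
from typing import List


def _bce(p: float, y: int, eps: float = 1e-7) -> float:
    """Binary cross-entropy for a single discriminator output p."""
    p = min(max(p, eps), 1.0 - eps)  # clip to avoid log(0)
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))


def discriminator_loss(d_real: List[float], d_fake: List[float]) -> float:
    """D should output 1 for real artifact-free images and 0 for corrected ones."""
    real = sum(_bce(p, 1) for p in d_real) / len(d_real)
    fake = sum(_bce(p, 0) for p in d_fake) / len(d_fake)
    return real + fake


def generator_loss(d_fake: List[float], corrected: List[float],
                   target: List[float], lam: float = 100.0) -> float:
    """Adversarial term (fool D) plus lam times the L1 distance to the artifact-free target."""
    if len(corrected) != len(target):
        raise ValueError("corrected and target images must be the same size")
    adv = sum(_bce(p, 1) for p in d_fake) / len(d_fake)
    l1 = sum(abs(c - t) for c, t in zip(corrected, target)) / len(target)
    return adv + lam * l1


# Toy values: D outputs on two real and two corrected images, plus one tiny "image".
d_real = [0.9, 0.8]
d_fake = [0.3, 0.2]
print(discriminator_loss(d_real, d_fake))
print(generator_loss(d_fake, corrected=[0.2, 0.5], target=[0.2, 0.4]))
```

The L1 term keeps the corrected image anchored to the patient's true anatomy, while the adversarial term pushes the output to look like a realistic artifact-free scan.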

Figure 3. Data pipeline for the GAN correction network.

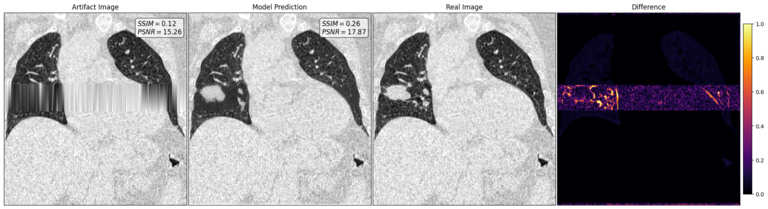

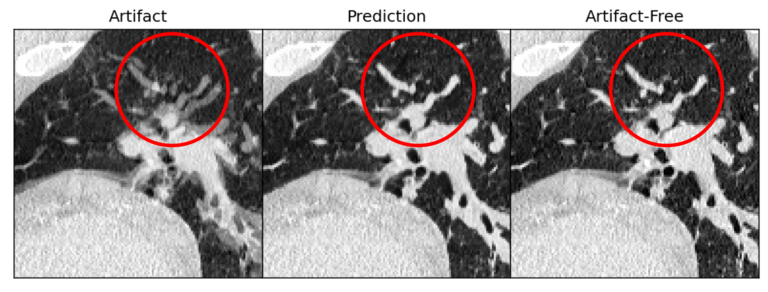

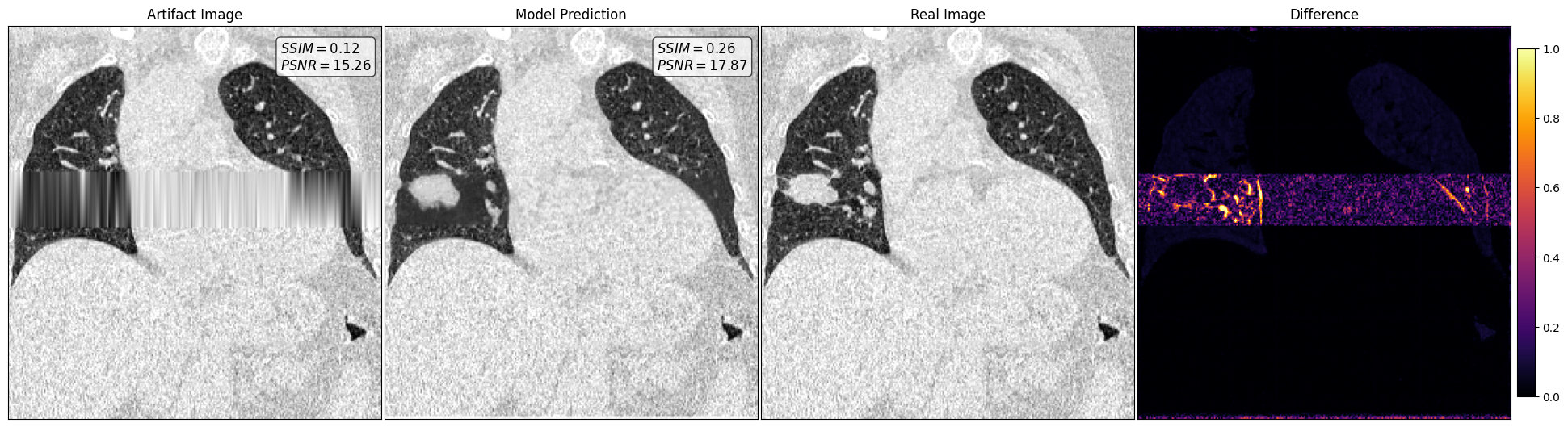

The model we developed is able to recover patient-specific information that was lost due to the artifact. Figure 4 shows how the model performs for a patient with a tumor. In the artifact image, the tumor is lost and can no longer be seen; the correction model restores it. The difference image shows where the model's output still deviates from the real artifact-free image, but overall the results are very promising.
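A difference image like the one in Figure 4 is typically computed voxel by voxel as the corrected image minus the artifact-free ground truth. The sketch below assumes both inputs are 2D slices of the same size in Hounsfield units (HU); the function names and toy values are hypothetical, and mean absolute error is just one possible summary score, not necessarily the metric behind Figure 4.

```python
from typing import List

Image2D = List[List[float]]


def difference_image(corrected: Image2D, ground_truth: Image2D) -> Image2D:
    """Signed voxel-wise difference: positive where the model over-estimates intensity."""
    if len(corrected) != len(ground_truth) or any(
        len(a) != len(b) for a, b in zip(corrected, ground_truth)
    ):
        raise ValueError("images must have the same shape")
    return [[c - g for c, g in zip(rc, rg)] for rc, rg in zip(corrected, ground_truth)]


def mean_absolute_error(diff: Image2D) -> float:
    """Average magnitude of the difference image (0 means a perfect match)."""
    values = [abs(v) for row in diff for v in row]
    return sum(values) / len(values)


gt = [[-1000.0, 40.0], [40.0, -800.0]]    # toy HU values: air, soft tissue, lung
pred = [[-1000.0, 30.0], [50.0, -800.0]]  # toy corrected slice
diff = difference_image(pred, gt)
print(diff, mean_absolute_error(diff))
```

Keeping the difference signed shows whether the model under- or over-estimates tissue density in a region, which a single error number hides.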

Figure 4. Artifact correction network performance.

Conclusion:

How can our deep learning networks make an impact on the patient? Common practice in cancer centers is to take two 4DCT scans every 3 months. Since each 4DCT scan contains ten 3D scans, a patient will have received 80 3D scans in a year, in addition to the radiation they need to kill their cancer. 4DCT scans also use higher radiation doses than a standard CT scan. By correcting scans with artifacts, we can reduce this number by 50% and reduce the overall radiation delivered to the patient. Additionally, artifact-free scans improve anatomical accuracy and allow physicians to target the tumor more precisely. As a result, we will be able to reduce the long-lasting effects of radiation toxicity and improve patient survival.
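The yearly count follows from simple arithmetic, assuming each 4DCT scan contains the ten 3D phase volumes described in the Background and reading the 50% reduction as one of the two scans per session no longer being needed (that reading is an assumption):

$$
\underbrace{2}_{\text{4DCT scans per session}} \times \underbrace{4}_{\text{sessions per year}} \times \underbrace{10}_{\text{3D volumes per 4DCT}} = 80 \ \text{3D volumes per year}, \qquad 80 \times (1 - 0.5) = 40 .
$$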

References:

1. National Cancer Institute Surveillance, Epidemiology, and End Results (SEER) Program, "Cancer statistics," https://seer.cancer.gov, released April 15, 2022.

2. American Cancer Society, Cancer Facts & Figures 2022. Atlanta, GA, USA: American Cancer Society, 2022.

3. G. Rodrigues, M. Lock, D. D’Souza, E. Yu, and J. Van Dyk, “Prediction of radiation pneumonitis by dose–volume histogram parameters in lung cancer—a systematic review,” Radiother. Oncol., 2004.

____________________________________________________________________________________________________________________________________________________________

This work was presented at the 2022 National GEM Consortium Conference in Phoenix, AZ. It was awarded Best Technical Presentation in the Early-PhD Category.

This work was presented at the 2022 Biomedical Engineering Society Conference in San Antonio, TX.

____________________________________________________________________________________________________________________________________________________________

For more information about deep learning in radiation therapy or how to join this project, please contact Joshua Carrizales.